At a time when many school districts are eager to expand their corps of effective principals, many principal preparation programs are considering how to improve the training that shapes future school leaders. One survey of university-based training programs found, for example, that well over half of respondents planned to make moderate to significant changes in their offerings in the near future.

Enter Quality Measures, a self-study tool meant to allow programs to compare their courses of study and procedures with research-based indicators of program quality, so they can embark on upgrades that make sense.

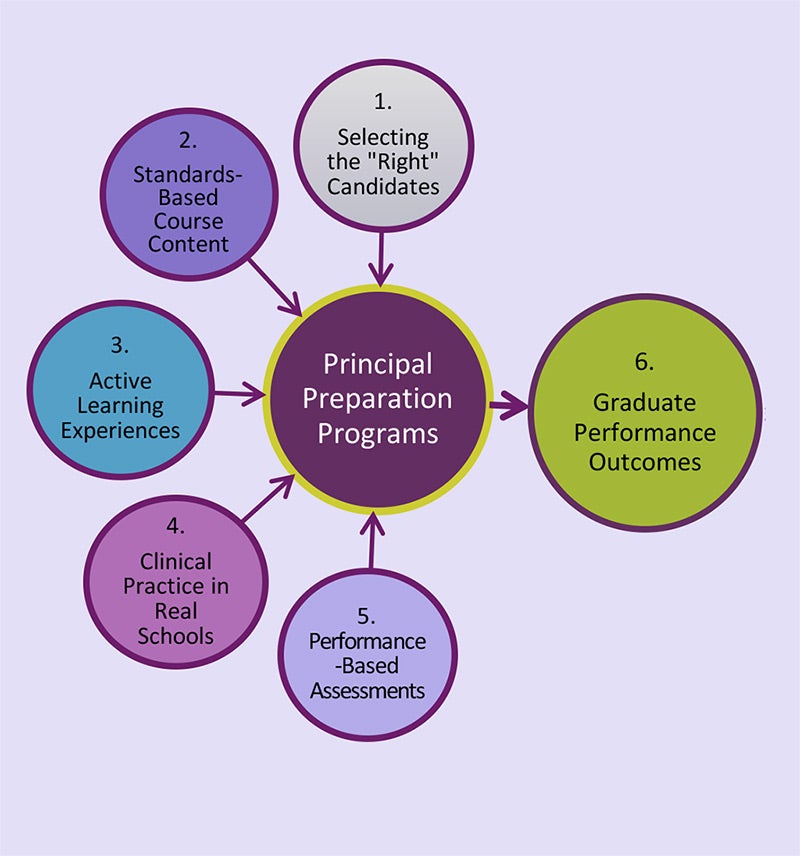

Specifically, Quality Measures assesses programs in six domains: candidate admissions, course content, pedagogy, clinical practice, performance assessment, and graduate performance outcomes. With an accurate picture of their work in these areas, programs can start planning the right improvements.

The tool was first rolled out in 2009, and its 10th edition was recently published. It reflects new research and such developments as the 2015 release of the Professional Standards for Educational Leaders, a set of model standards for principals. Given all this, now seemed a good moment to engage with the Education Development Center’s Cheryl King, who has led the development and refinement of Quality Measures over the years. Below are edited excerpts of our email Q&A.

Why is quality assessment important for principal prep programs?

In our work with programs, we find that the practice of routine program self-assessment is viewed positively by most participating programs. It provides a non-threatening way for programs to connect with the literature on best training practices and to consider how their programs compare.

Additionally, users tell us that having a set of standards-based metrics, which clearly define empirically based practices that produce effective school leaders, provides them with timely and actionable data that can be translated into change strategies.

That was the case when faculty members from four programs discovered a common weakness in their admissions procedures. Using Quality Measures together, they saw that all four programs lagged in using tools designed to help predict whether an applicant is the “right” candidate for admission to the preparation program. They then began to identify and exchange existing tools, and later determined what might help them better assess candidate readiness for principal training.

It has been our experience that assessment cultures based on solving persistent and common problems of practice are far more effective than cultures clouded by fears of penalties as a result of external evaluation.

What are the one or two most common areas of improvement for programs pinpointed by Quality Measures?

Domains are typically identified as needing improvement based on a program’s inability to provide strong supporting evidence. Domain 6, graduate performance outcomes, has been consistently identified by programs using Quality Measures as an area in need of improvement. A commonly cited reason is lack of access to school district data about graduates after they complete the program.

Take the four programs I mentioned. On a scale of 1 to 4, with “1” the lowest and “4” the highest, their average score was 1.5 in their ability to get information on things like their graduates’ rate of retention when placed in low-performing schools or their graduates’ results in job performance evaluations. On the other hand, the programs were fairly successful (an average rating of “3”) in getting needed data about how their graduates fared when it came to obtaining state certification.

Another domain commonly identified across programs as needing improvement is Domain 5, performance assessment. The revised indicators in the updated tool call for more rigorous measures of candidate performance to replace traditional capstone projects and portfolios. We are finding that the more explicit criteria in the 10th edition are challenging programs to think in exciting new ways about candidate performance assessment.

Where are programs typically the strongest?

Programs typically rate Domains 2 (course content), 3 (instructional methods), and 4 (clinical practice) as meeting all or most criteria. Programs share compelling evidence with peers to support these higher ratings. They offer several explanations, including the recognition that these are the domains that typically receive the majority of their time and resources.

The inclusion of culturally responsive pedagogy as a new indicator in the 10th edition is among a number of additions to these three domains, based on the newly published Professional Standards for Educational Leaders. New indicators and criteria place new demands on preparation programs, with exciting implications for improvement.

In updating the tool, what did you find surprising?

One thing that greatly struck us was the increased attention being paid to the impact of candidate admission practices on the development of effective principals. Similarly, recent empirical findings about pre-admission assessment of candidate dispositions, aspirations and aptitudes as predictors of successful principals were compelling. We immediately revised Domain 1—candidate admissions—to incorporate them.